The taste frontier

Why human-in-the-loop isn't optional for agentic AI

AI natives are familiar with the moment when AI starts deviating from what you actually want. The code works, but the design isn’t what you pictured, or the content is starting to read like corporate jargon rather than your preferred tone of voice. It’s drifted below the taste frontier.

Adding human taste into the loop isn’t just a feature of agentic AI; it’s the constraint and value lens that makes AI systems worth shipping in the first place. It ties together design, liability, and the probabilistic nature of language models.

Where do humans apply taste to agentic AI?

Let’s start by thinking about decision-making in a typical agentic AI system.

First, we communicate the task we want completed through chatbots, email messages, command lines, and other means. Then the AI gets to work making a bunch of decisions itself and presents us with the results. Finally, we decide on how to use them.

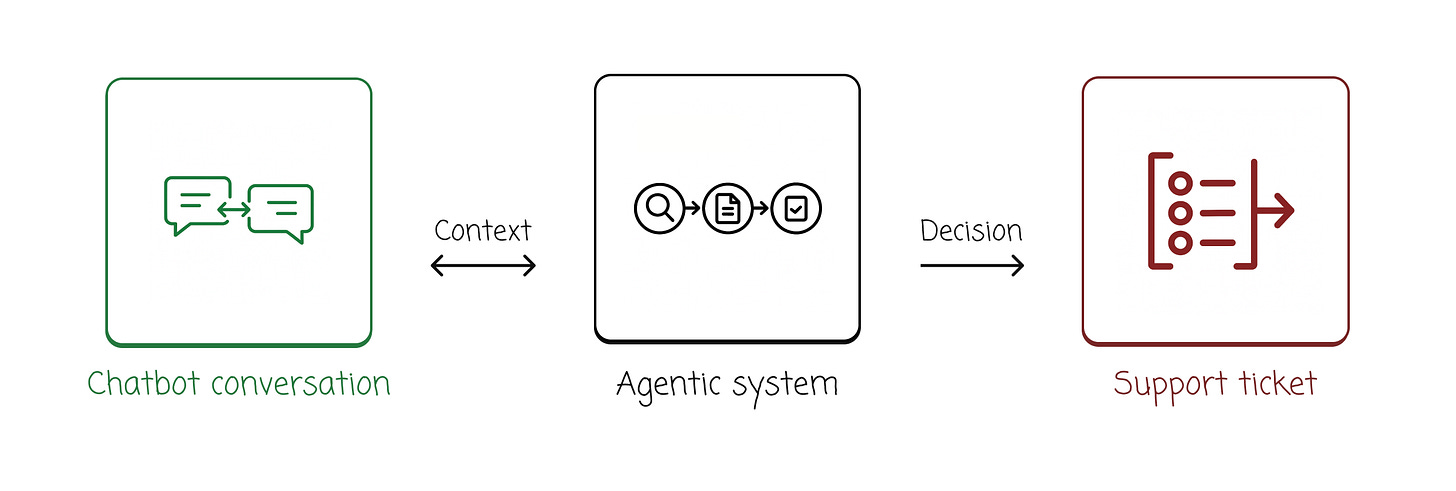

For example, imagine you’re reporting a hardware incident using a chatbot powered by an agentic AI system that raises support tickets.

There are two places where humans affect the final judgment: the customer in their conversation with the chatbot, and the human support agent reviewing the generated ticket.

Humans apply their taste to both the input and the output.
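To make the two touchpoints concrete, here’s a minimal sketch of the support-ticket flow. Everything in it is an assumption for illustration: `Ticket`, `draft_ticket`, `run_pipeline` and `support_agent_review` are hypothetical names, and the keyword check stands in for what would really be a language model call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Ticket:
    summary: str
    priority: str
    approved: bool = False


def draft_ticket(customer_message: str) -> Ticket:
    """Stand-in for the agent; a real system would call a language model here."""
    urgent = any(word in customer_message.lower() for word in ("down", "outage", "fire"))
    return Ticket(
        summary=customer_message.strip()[:80],
        priority="high" if urgent else "normal",
    )


def run_pipeline(customer_message: str, review: Callable[[Ticket], Ticket]) -> Ticket:
    # Human touchpoint 1: the customer shapes the input.
    ticket = draft_ticket(customer_message)
    # Human touchpoint 2: a support agent reviews the output and may edit it.
    reviewed = review(ticket)
    reviewed.approved = True
    return reviewed


def support_agent_review(ticket: Ticket) -> Ticket:
    # Hypothetical reviewer judgment: a single laptop is never "high" priority.
    if "laptop" in ticket.summary.lower():
        ticket.priority = "normal"
    return ticket


ticket = run_pipeline("My laptop is down and will not boot", support_agent_review)
print(ticket)
```

The agent drafts the ticket as high priority, and the reviewer’s judgment overrides it before anything is approved. Nothing ships without passing through both humans.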

But didn’t humans build the agentic system itself?

Maybe AI wrote the code, but a human chose how the agentic system itself works.

The human-centred view of AI systems raises a bigger question:

Is full autonomy a mirage?

In 2012, the US Department of Defense was tasked with defining levels of autonomy in its systems. Their conclusion:

Cognitively, system autonomy is a continuum from complete human control of all decisions to situations where many functions are delegated to the computer with only high-level supervision and/or oversight from its operator.

Even in what people often consider the most autonomous AI — robots — human judgment determines the value.

How does a robo-vacuum know where to clean? A human decided what “clean” means, which areas matter, and what obstacles to avoid.

AI decides the actions, but the operators’ decisions determine the purpose.

Agency of purpose ≠ Agency of execution

Humans choose what.

Agentic AI chooses how.

And because AIs can perform many tasks at superhuman speed, they’re able to quickly generate multiple options. This brings the user experience of human-AI interactions into focus.

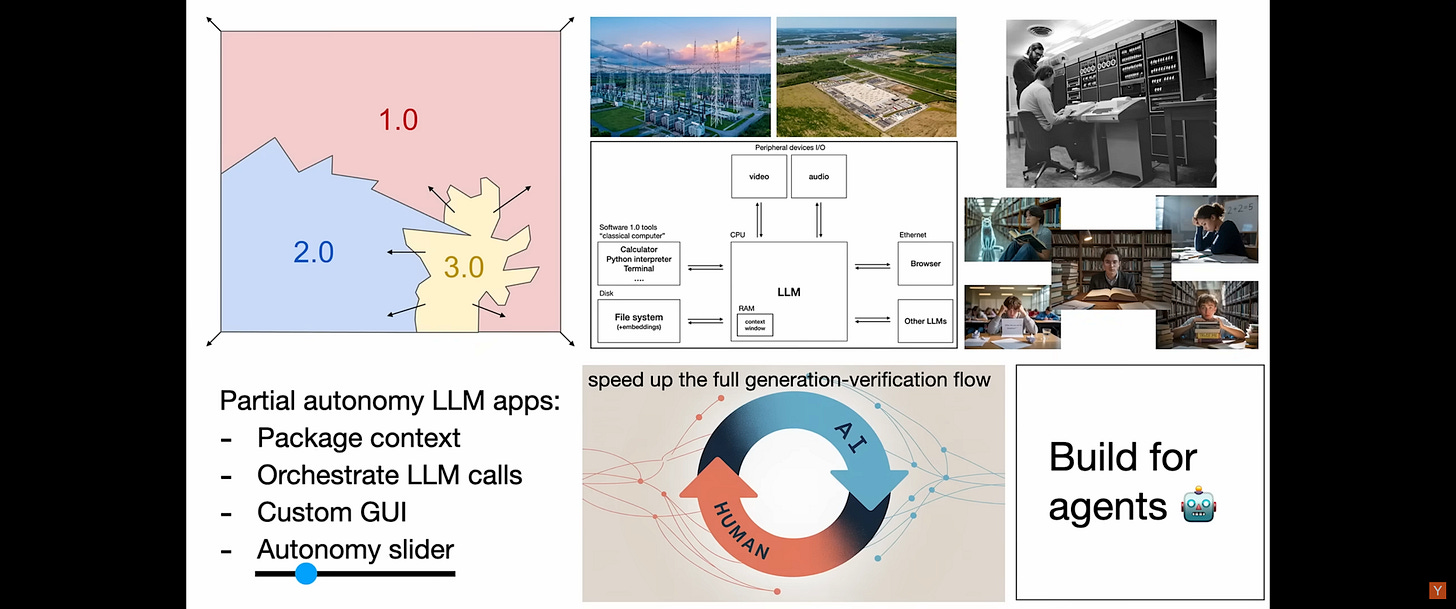

Andrej Karpathy suggested there could even be something like an autonomy slider in the age of Software 3.0. He also emphasised the back-and-forth nature of AI generation and human verification.
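One way to picture an autonomy slider is as a threshold on risk. The sketch below is my own illustration, not Karpathy’s design: the `Action` type, the `risk` scores and the `needs_human_approval` rule are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    risk: float  # hypothetical score: 0.0 (harmless) to 1.0 (high stakes)


def needs_human_approval(action: Action, autonomy: float) -> bool:
    """Return True when the action is too risky for the current slider setting.

    autonomy = 0.0 means every action is reviewed; 1.0 means none are.
    """
    if not 0.0 <= autonomy <= 1.0:
        raise ValueError("autonomy must be between 0.0 and 1.0")
    return autonomy < 1.0 and action.risk >= autonomy


def review_queue(actions: list[Action], autonomy: float) -> list[str]:
    # Names of the actions a human must verify before they run.
    return [a.name for a in actions if needs_human_approval(a, autonomy)]


actions = [
    Action("format code", 0.1),
    Action("edit config", 0.5),
    Action("deploy to production", 0.9),
]

print(review_queue(actions, 0.3))
print(review_queue(actions, 0.8))
```

Sliding towards full autonomy shrinks the review queue, but a human still chose where the slider sits and how risk is scored.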

Taste as first-class design constraint

Many people still treat human-in-the-loop as a safety feature of AI systems. But rather than something to eventually be engineered out, I’d argue it’s a primary constraint that makes these systems valuable in the first place.

Think about what you’re injecting into the AI with your taste:

awareness of context you consider important

how much risk you’re willing to tolerate

cultural considerations

quality standards

what you value

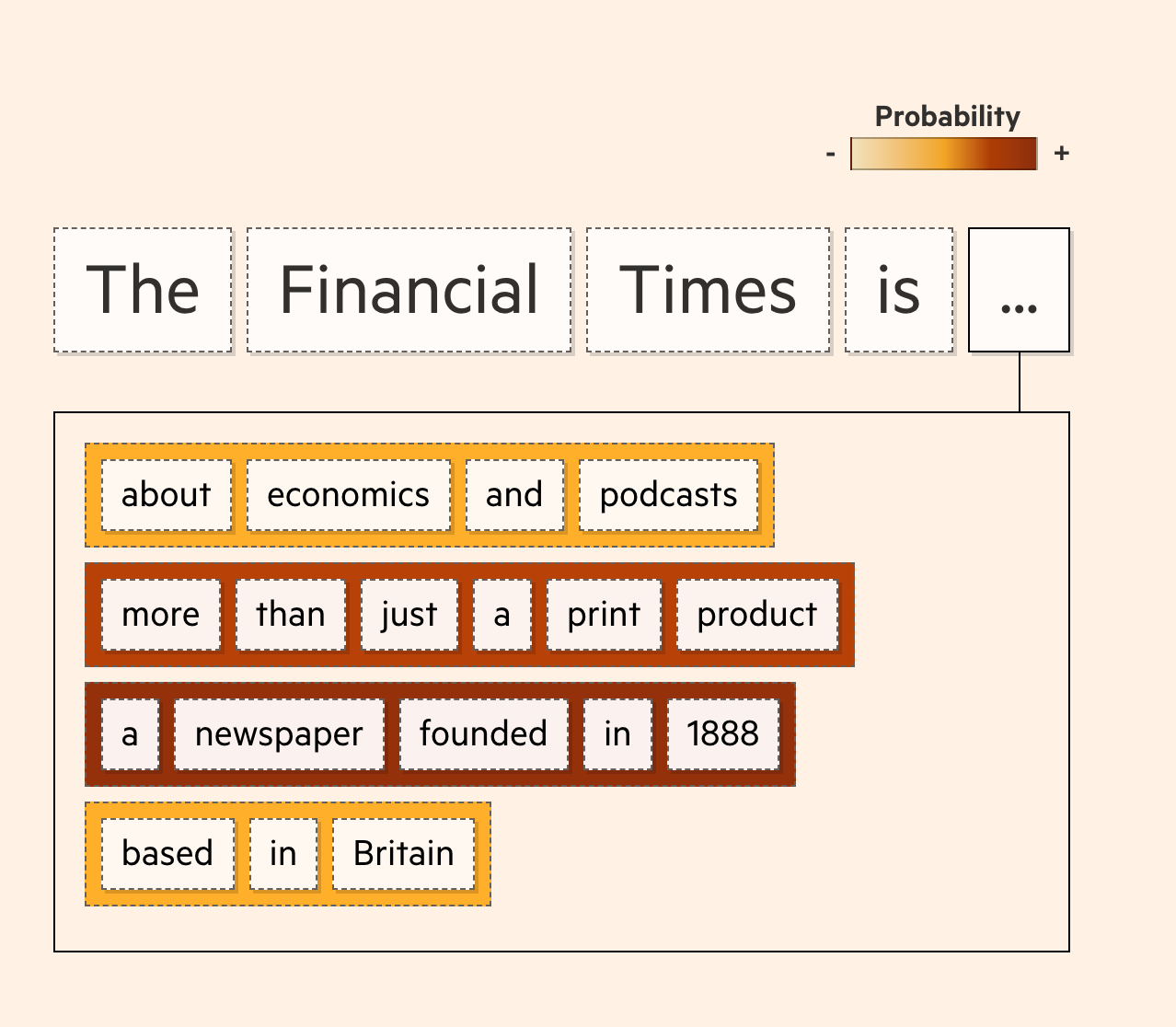

Language models’ non-determinism is fundamental. Each output is sampled from a probability distribution over possible next tokens.

Someone needs to evaluate whether the result from an agentic AI system powered by language models aligns with intent, context, and purpose. The variability of outputs isn’t necessarily a limitation to overcome — it’s why human taste remains irreplaceable.
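Here’s a toy sketch of that idea: candidates are sampled from a softmax distribution, and a person picks the one that fits. The options, the scores and the `human_pick` helper are made up for illustration, and a real model samples token by token rather than choosing between whole styles.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_candidates(options, logits, n, temperature=1.0, seed=None):
    """Draw n candidates at random, weighted by their softmax probability."""
    rng = random.Random(seed)
    probs = softmax(logits, temperature)
    return rng.choices(options, weights=probs, k=n)


def human_pick(candidates, prefers):
    """Stand-in for a person's judgment: return the first candidate they accept."""
    for candidate in candidates:
        if prefers(candidate):
            return candidate
    return None  # nothing met the bar, so regenerate or write it by hand


options = ["formal", "friendly", "jargon-heavy"]
logits = [1.0, 1.2, 2.0]  # hypothetical scores; the model "likes" jargon most

candidates = sample_candidates(options, logits, n=5, temperature=0.8, seed=7)
choice = human_pick(candidates, prefers=lambda tone: tone != "jargon-heavy")
print(candidates, choice)
```

The model’s most probable output isn’t necessarily the one you want. The sampling provides the options, and the `prefers` function, which is the human, supplies the taste.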

Historical validation

In my previous post, I wrote about how DEC’s XCON/R1 expert system delivered huge benefits while achieving 90-99% accuracy throughout its lifetime.

The system succeeded because it accepted human-chosen components, addressed a clear, human-defined business need, and produced schematics for humans to evaluate and build upon. Human oversight was never optional.

Liability as a forcing function for taste

Software can’t be sued, so neither can AI.

Every deployed system has a human principal behind it. Even if we reach the point that AI runs organisations with AI employees, a human will have kicked off the process. (It’s turtles all the way down, with a human at the bottom.) The human signing off needs to trust that their judgment has been encoded correctly in the system’s output.

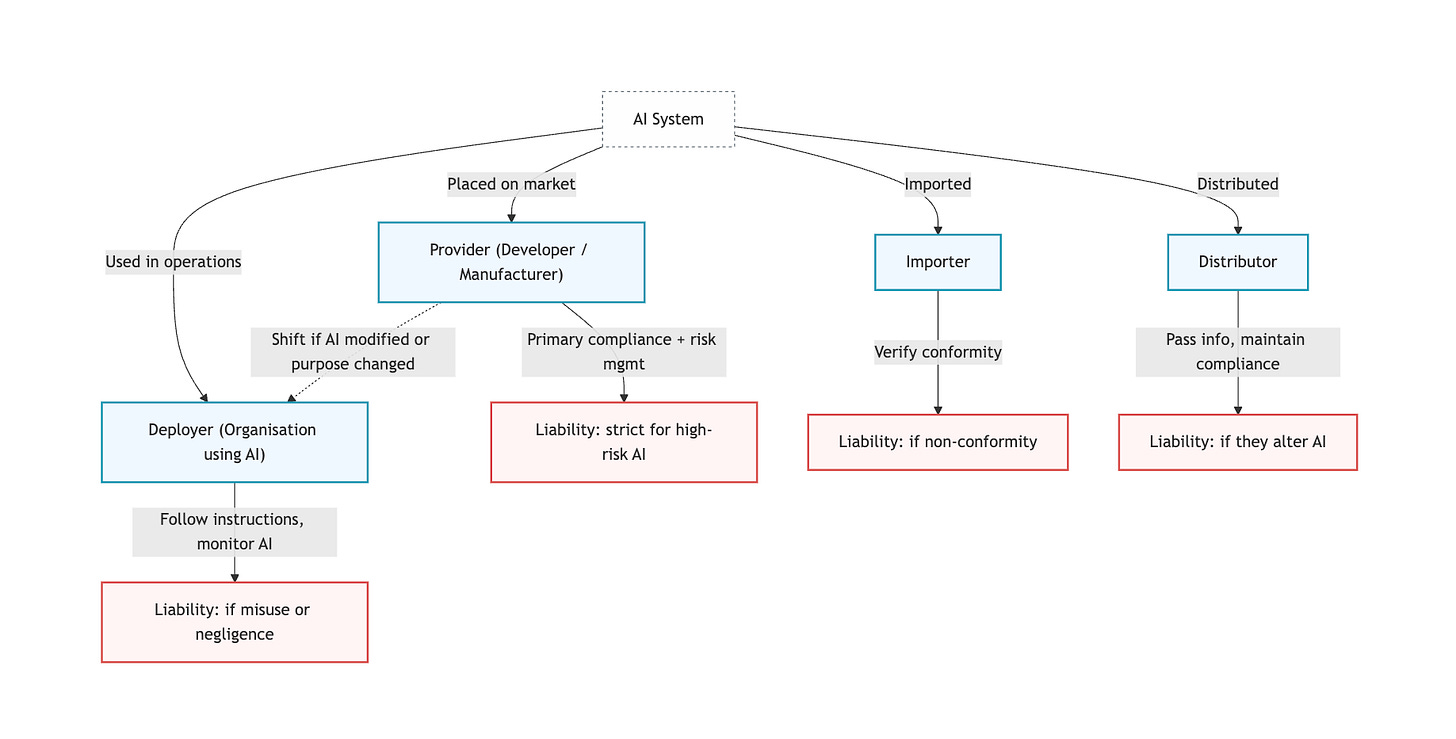

This liability framework doesn’t show signs of going away anytime soon. In the EU AI Act, the relevant parties include providers, deployers and distributors.

Responsibility ultimately falls to accountable humans.

So, can we afford to be less anxious about automation?

Making taste explicit lets us build creative, human-defined processes around AI rather than simply replacing people.

But let’s be real: there’s no reason to expect that automation will always be complementary. Previous waves of automation didn’t just augment workers; they increased the polarisation of employment and wages.

Since the release of ChatGPT, people have been repeating the line “AI won’t replace you, the person using AI will”. That might be true. People who use AI to automate tasks that would otherwise have been part of their work are described as being augmented.

But is it cope?

The media loves to ask the CEOs of the AI labs for their timeline predictions for the complete automation of jobs. But AI is already being deployed, and generating billions of dollars, through tight human-feedback loops.

On the Dwarkesh Podcast, Sergey Levine recently spoke about how this thinking is being applied to make autonomous robots market-viable:

What I can tell you is this. It's much easier to get effective systems rolled out gradually in a human-in-the-loop setup. Again, this is exactly what we've seen with coding systems. I think we'll see the same thing with automation, where basically robot plus human is much better than just human or just robot. That just makes total sense. It also makes it much easier to get all the technology bootstrapped. Because when it's robot plus human now, there's a lot more potential for the robot to actually learn on the job, acquire new skills.

These types of AI are inherently collaborative — not adversarial.

Whatever automation looks like, I’d bet we’ll be building AI systems that are human-dependent for the foreseeable future. This allows the people creating and using agentic AI today to adapt and grow with the technology, but displacement remains a real risk.

Final thoughts

No amount of computation can bridge the divide between “this is a possible solution” and “this is the right solution for us, now, in this context”. This isn’t some temporary limitation of current AI. It’s a logical gap.

Every agentic AI system that I’ve built and regularly use has been designed with a human in the loop at its core, not as an afterthought. Meanwhile, the companies that win with AI aren’t trying to eliminate human judgment; they’re learning to encode and scale it effectively. Embracing AI coding tools and augmenting employees is how so many new companies are achieving record ARR.

Success is then measured not by the percentage of work automated, but by how much taste is amplified.

We need new interfaces for this new age of software. These systems should enable users to encode their preferences, degrade gracefully from the taste frontier, and make it easy to adjust and verify the outputs.
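As a rough sketch of what such an interface could look like underneath, preferences can be written down as named checks, with anything that fails a check falling back to human review instead of shipping. `TasteProfile`, `deliver` and the two example rules are hypothetical names I’ve made up for illustration, not an established pattern or library.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class TasteProfile:
    # Each check is a named, human-written rule that returns True when the output passes.
    checks: dict[str, Callable[[str], bool]] = field(default_factory=dict)

    def failures(self, output: str) -> list[str]:
        """Names of the checks the output fails, in the order they were defined."""
        return [name for name, check in self.checks.items() if not check(output)]


def deliver(output: str, profile: TasteProfile) -> dict:
    """Auto-approve output that passes every check; otherwise route it to a person."""
    failed = profile.failures(output)
    status = "auto_approved" if not failed else "needs_review"
    return {"status": status, "output": output, "failed": failed}


profile = TasteProfile(
    checks={
        "no_jargon": lambda text: "synergy" not in text.lower(),
        "short": lambda text: len(text.split()) <= 30,
    }
)

print(deliver("We fixed the login bug.", profile))
print(deliver("Leveraging synergy across verticals.", profile))
```

The checks are easy to read and adjust, the `failed` list tells the reviewer exactly what to verify, and an output that drifts below the bar degrades to a review request rather than a silent failure.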

If you’re interested in learning how to build these taste-aware agentic systems yourself, I walk through the practical implementation in my Udemy course on building winning agentic AI with Python. It covers the hands-on side of this topic.