The AI that saved $25 million, failed catastrophically, then came back

Expert systems 2.0: Agentic AI for human-in-the-loop augmentation

Expert systems

Expert systems were a type of rule-based AI that automated costly tasks.

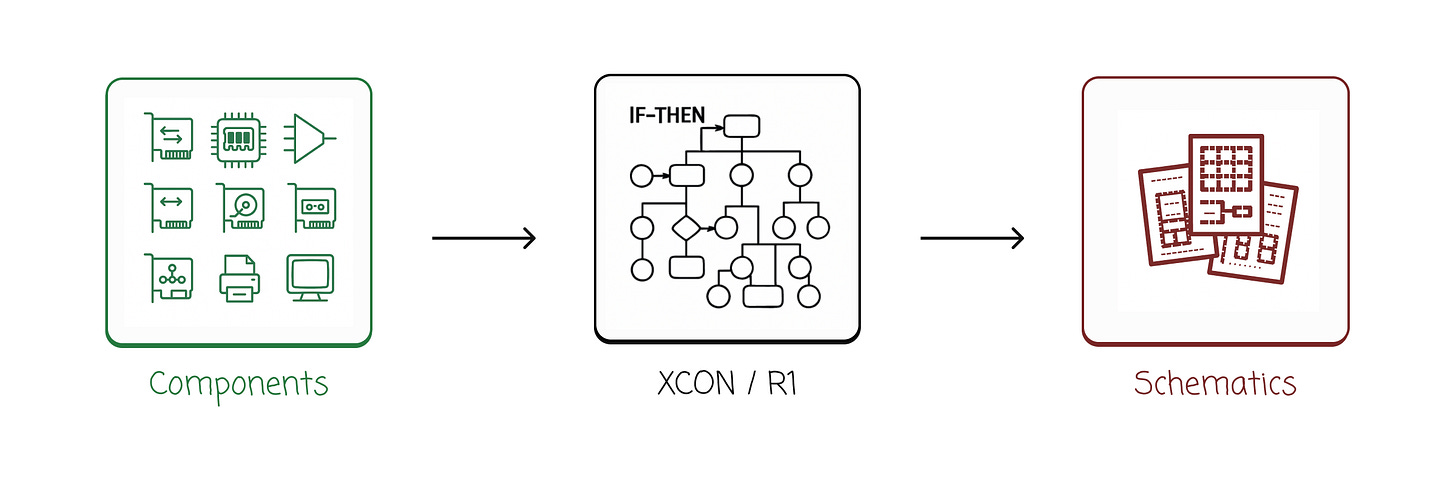

One blockbuster expert system was XCON, created in 1978. XCON, also known as R1 [1], reportedly saved DEC (now part of Hewlett-Packard) $25 million per year by 1986 [2].

How?

Engineers had to turn a list of components from a customer order into a physical computer. The only way to work out how to assemble these adult-sized “minicomputers” was to physically build them, and this trial and error meant an order took 10-15 weeks to fulfil.

XCON, essentially a few thousand hard-coded rules, generated schematics with 90-99% accuracy throughout its lifetime and cut order fulfilment time to 2-3 days.
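To make "a few thousand hard-coded rules" concrete, here is a minimal sketch of the general technique, a forward-chaining rule engine. The rule names and parts are hypothetical, invented for illustration; this is not XCON's actual rule base or implementation.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    condition: Callable[[set[str]], bool]  # does the rule apply to the current parts?
    additions: set[str]                    # parts the rule adds when it fires


# Hypothetical rules, invented for illustration; not taken from XCON.
RULES = [
    Rule("cpu-needs-power-supply", lambda parts: "cpu" in parts, {"power-supply"}),
    Rule("disk-needs-controller", lambda parts: "disk-drive" in parts, {"disk-controller"}),
    Rule("controller-needs-cable", lambda parts: "disk-controller" in parts, {"controller-cable"}),
]


def configure(order: set[str], rules: list[Rule]) -> tuple[set[str], list[str]]:
    """Apply rules repeatedly until no rule adds anything new (forward chaining)."""
    parts = set(order)
    fired: list[str] = []
    changed = True
    while changed:
        changed = False
        for rule in rules:
            missing = rule.additions - parts
            if rule.condition(parts) and missing:
                parts |= missing
                fired.append(rule.name)
                changed = True
    return parts, fired


parts, fired = configure({"cpu", "disk-drive"}, RULES)
print(sorted(parts))  # the completed parts list
print(fired)          # which rules fired, in order
```

Every rule is written and maintained by hand, so each new product or exception means another entry in `RULES`. That is manageable at three rules and much harder at thousands.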

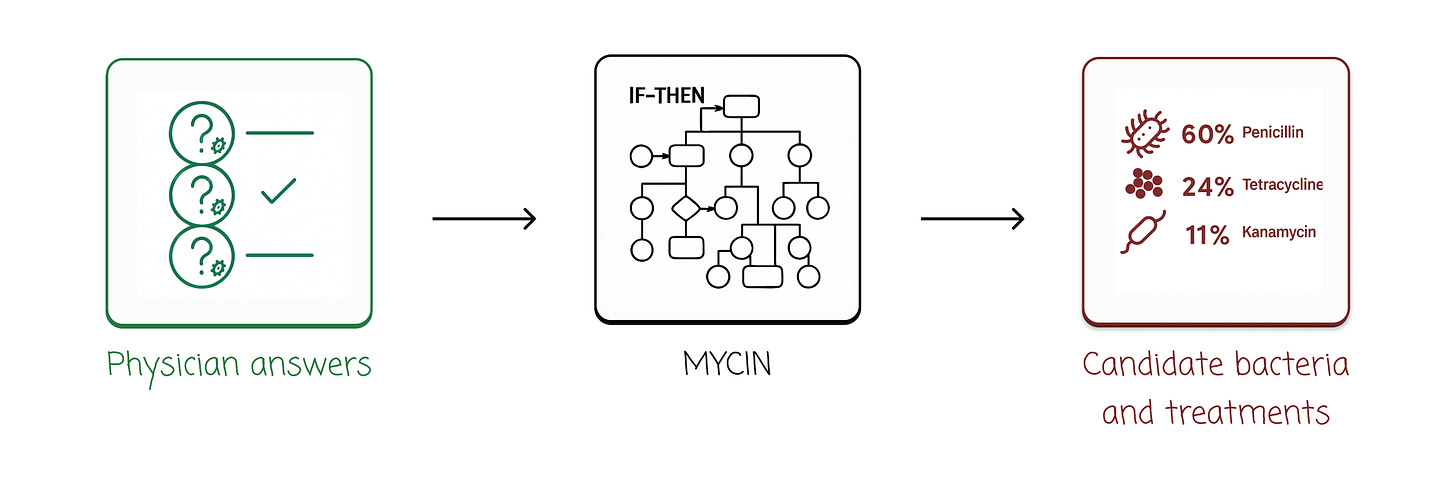

Another system from the early 1970s, MYCIN, outperformed human physicians at diagnosing and treating bacterial infections [3].

This diagnostic tool was never deployed because of non-technical issues (not much has changed!). Separately, XCON eventually failed after growing to more than 10,000 rules that could no longer be maintained, a victim of the infamous AI winter.

Today’s agentic AI systems aren’t built with hardcoded rules — they’re built with language models. However, the core pattern for designing successful systems remains the same: build for the humans in the loop.

How AI can augment humans today

Customer support agents

Enterprises recognise the value in scaling their ability to engage with customers 24/7 across all the languages of their target markets, deploying AI agents that handle web chatbot conversations, emails, and phone calls.

Even a simple chatbot living inside a company’s secure web portal could act as a first responder to incidents, handling simple issues, prioritising issues, and routing tickets to the appropriate team — all while allowing escalation to a human agent at all times. This would enable human support agents to scale their work in a way that would otherwise be much more costly to achieve.
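The first-responder flow described above (answer simple issues, prioritise, route, always allow escalation) can be sketched as a small triage function. This is an illustrative sketch only: the keywords, team names, and canned answer are hypothetical, and plain keyword matching stands in for the language model a real agent would use to classify messages.

```python
from dataclasses import dataclass

# Hypothetical keywords, answers, and team names, chosen only for illustration.
SELF_SERVICE_ANSWERS = {
    "reset password": "Use the 'Forgot password' link on the sign-in page.",
}
TEAM_KEYWORDS = {
    "invoice": "billing",
    "refund": "billing",
    "outage": "infrastructure",
    "error": "engineering",
}
URGENT_KEYWORDS = ("outage", "data loss", "security")


@dataclass
class Decision:
    action: str    # "answer", "route", or "escalate"
    priority: str  # "high" or "normal"
    target: str    # reply text, team name, or "human-agent"


def triage(message: str, wants_human: bool = False) -> Decision:
    text = message.lower()
    priority = "high" if any(k in text for k in URGENT_KEYWORDS) else "normal"
    # Escalation to a person is always available, so it is checked first.
    if wants_human:
        return Decision("escalate", priority, "human-agent")
    # Simple issues get a direct answer.
    for phrase, answer in SELF_SERVICE_ANSWERS.items():
        if phrase in text:
            return Decision("answer", priority, answer)
    # Recognised topics are routed to the matching team.
    for keyword, team in TEAM_KEYWORDS.items():
        if keyword in text:
            return Decision("route", priority, team)
    # Anything unclassified goes to a person rather than being guessed at.
    return Decision("escalate", priority, "human-agent")


print(triage("We have an outage in the EU region"))
print(triage("My invoice is wrong", wants_human=True))
```

The design choice worth noting is the final fallback: when the bot cannot classify a message, it hands over to a human instead of guessing.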

An example: Sierra, a customer support AI startup, had reportedly reached $20 million in ARR as of October 2024.

Coding agents

Some of the most valuable agentic systems have very tight human-AI feedback loops.

I’m unsure whether my coding habits are atypical, but when I use a coding agent, I try to watch every line of output as it’s generated. (It feels like micromanagement 😇)

These AIs ask for permission before taking risky actions and document every action they take. Some, like Claude Code, allow interruptions and real-time messaging while still completing tasks.

As the AI thinks out loud, explaining and documenting every action it’s considering, I learn what I’ve underspecified and catch potential issues before they compound. This style of collaboration feels like it gives me the AI’s speed while I maintain oversight over the system being built.
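The permission-and-logging behaviour described above can be sketched as a gate that sits between the agent and its actions. This is a minimal illustration under stated assumptions, not how Claude Code or any other product is implemented: `GatedAgent`, `RISKY_ACTIONS`, and the action names are all hypothetical, and a lambda stands in for the interactive prompt.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical action names; a real agent would have its own risk policy.
RISKY_ACTIONS = {"delete_file", "run_shell", "push_to_remote"}


@dataclass
class GatedAgent:
    approve: Callable[[str, str], bool]            # asks the human; True means allow
    log: list[str] = field(default_factory=list)   # record of every action attempted

    def perform(self, action: str, detail: str) -> bool:
        """Run the action only if it is safe or the human approves; log either way."""
        if action in RISKY_ACTIONS and not self.approve(action, detail):
            self.log.append(f"DENIED {action}: {detail}")
            return False
        # A real agent would execute the action here; this sketch only records it.
        self.log.append(f"DONE {action}: {detail}")
        return True


# Stand-in for an interactive prompt: approve shell commands, refuse other risky actions.
agent = GatedAgent(approve=lambda action, detail: action == "run_shell")
agent.perform("read_file", "src/app.py")    # not risky, runs without asking
agent.perform("run_shell", "pytest")        # risky, approved by the human
agent.perform("delete_file", "src/app.py")  # risky, refused by the human
print("\n".join(agent.log))
```

Because denied actions are logged alongside completed ones, the human reviewer can see what the agent wanted to do as well as what it did.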

Coding agents aren’t superhuman yet. Today, and into the foreseeable future, coding AIs are governed in the same way as human programmers: an expert reviews the changes. This seems unlikely to change, especially in high-stakes domains and systems.

An example: Anysphere — the company behind Cursor and its coding agent — reported $500 million ARR as of June 2025.

Final thoughts

The pattern here isn’t about replacing human expertise — from XCON’s $25 million in savings to today’s agentic systems, the highest-value AI opportunities are about augmentation.

One thing I’ve noticed about today’s AI systems is their conversational nature. The questions are adaptive, not hardcoded. The strategy unfolds in real-time.

I’d argue that agentic AI that delivers real business impact always maintains tight human oversight while automating the most tedious and repetitive tasks that slow down decision-making, rather than the whole job. We haven’t lost the lessons of expert systems; we’ve just found better ways to implement them.

[1] Not to be confused with the blockbuster Chinese language model, DeepSeek R1!