Adding reasoning to large language models

6 (+1) ways to engineer reasoning above LLMs to improve their capabilities

A non-exhaustive overview of engineering techniques for adding reasoning "above" language models:

Chain-of-thought

Self-consistency with chain-of-thought

Tree-of-thoughts

Graph-of-thoughts

Backtracking

Multiagent debate

Mixture of experts (bonus)

Let’s look at each of them at a high level.

Chain of thought ⛓

A good starting point for thinking about reasoning above the language model is chain-of-thought (CoT), which was introduced in Jan 2022.

CoT improves LMs.

It encourages them to solve tasks by prompting them to generate and follow a logical sequence of thoughts. This is especially helpful when tasks require a level of complex reasoning beyond simple prompting.
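To make that concrete, here is a minimal sketch of a few-shot CoT prompt builder. The function name `build_cot_prompt`, the `Q:`/`A:` layout and the worked example are my own illustrative assumptions, not something taken from the paper; the only idea it borrows is that each demonstration includes the intermediate reasoning, not just the final answer.

```python
def build_cot_prompt(question: str, demos: list[tuple[str, str, str]]) -> str:
    """Build a few-shot prompt whose demonstrations spell out their reasoning.

    Each demo is (question, step-by-step rationale, final answer).
    The layout is an assumption for illustration, not a fixed standard.
    """
    parts = []
    for demo_question, rationale, answer in demos:
        parts.append(f"Q: {demo_question}\nA: {rationale} The answer is {answer}.")
    # Leave the final answer open so the model continues with its own reasoning.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


# Hypothetical demonstration and question, made up for this sketch.
demos = [
    (
        "A shop has 3 boxes with 4 pens each. How many pens are there?",
        "There are 3 boxes and each holds 4 pens, so 3 * 4 = 12 pens.",
        "12",
    )
]
prompt = build_cot_prompt(
    "A train has 5 carriages with 20 seats each. How many seats are there?",
    demos,
)
print(prompt)
```

The resulting string would be sent to whichever model you use; the model call itself is deliberately left out.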

But there are many ways to go beyond "vanilla" CoT.

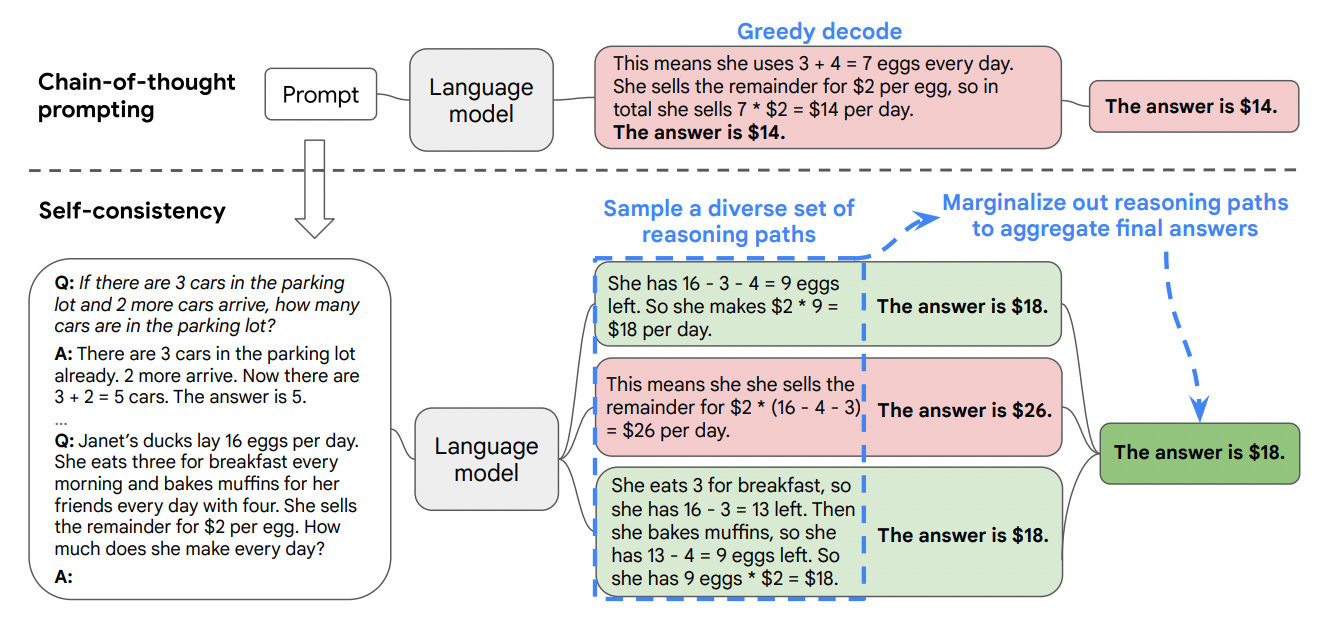

Self-consistency with chain-of-thought

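In the follow-up to the CoT paper, CoT-SC introduced a way to sample diverse reasoning paths and keep the answer they agree on most. The paper first appeared in March 2022, and its latest revision is dated 7 Mar 2023.

CoT-SC boosts performance.

It improves results on reasoning tasks like arithmetic without requiring extra training or supervision.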

A big bonus is that it gives us a transparent view of its decisions, which is great for interpretability.

Self-consistency hints that it's all about diverse reasoning.
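A rough sketch of the voting step, assuming the reasoning paths have already been sampled from a model. The helper names and the "last number in the text is the answer" rule are simplifying assumptions of mine, and the three completions are hard-coded stand-ins for real samples.

```python
import re
from collections import Counter
from typing import Optional


def extract_answer(completion: str) -> Optional[str]:
    """Assume the final number mentioned in a completion is its answer."""
    numbers = re.findall(r"-?\d+(?:\.\d+)?", completion)
    return numbers[-1] if numbers else None


def self_consistent_answer(completions: list[str]) -> Optional[str]:
    """Majority vote over the final answers of several reasoning paths."""
    answers = [a for a in map(extract_answer, completions) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Stand-ins for completions sampled at a non-zero temperature.
sampled = [
    "3 boxes of 4 pens is 3 * 4 = 12. The answer is 12.",
    "4 + 4 + 4 = 12, so the answer is 12.",
    "3 + 4 = 7. The answer is 7.",
]
final_answer = self_consistent_answer(sampled)
print(final_answer)
```

The reasoning paths can disagree along the way; only the final answers are compared.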

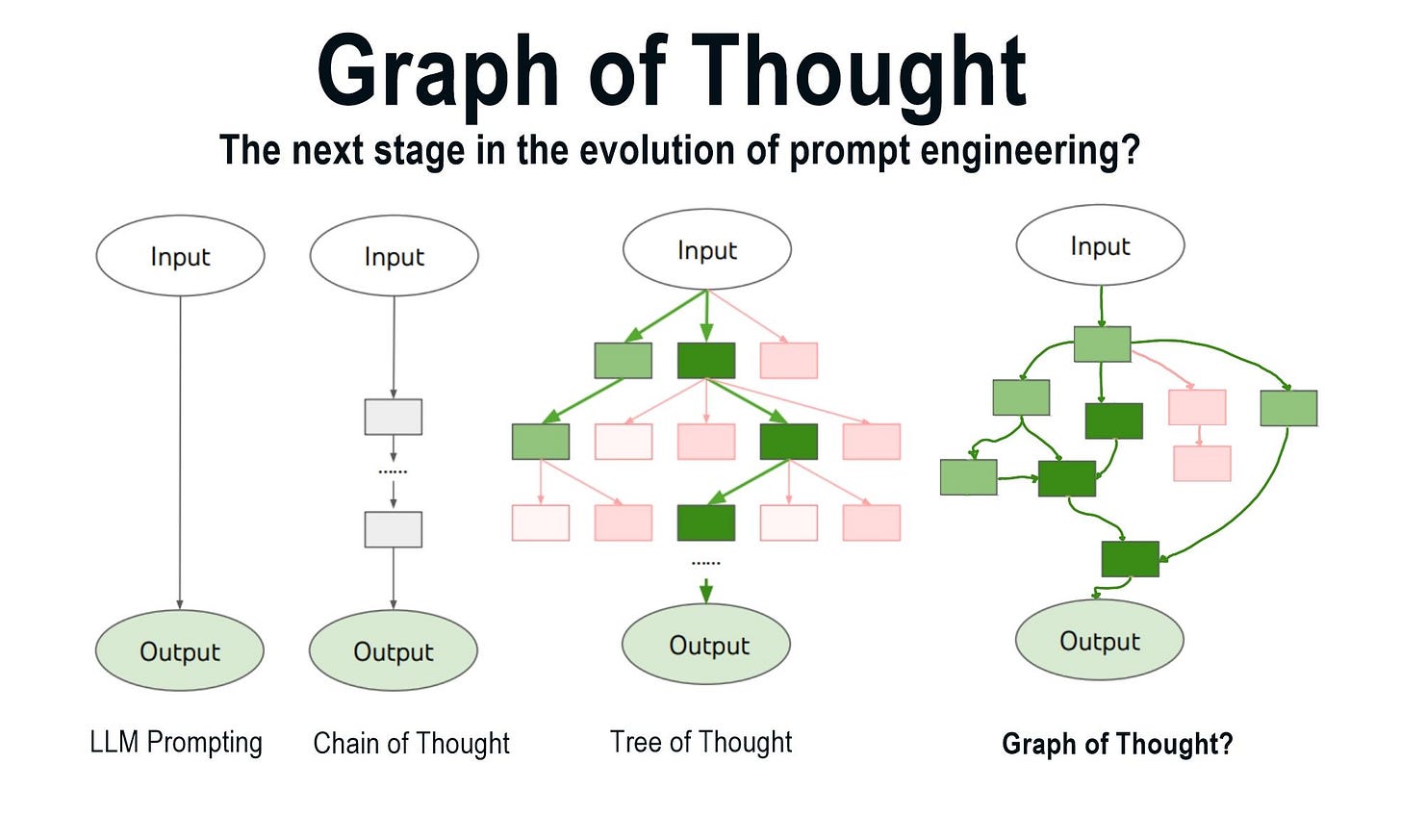

Tree of thoughts 🌴

The Tree-of-Thoughts (ToT) paper was published on 17 May 2023 and suggests a way to go further than self-consistency.

ToT is fascinating.

It was designed to provide a way for the system to self-evaluate, using forward planning and backtracking.

The paper explains that these are features of System 2 from the prominent dual-process theories in human psychology.
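Here is a toy sketch of the search pattern, assuming a breadth-first variant with a fixed beam width. In a real system the `propose` and `evaluate` functions would be LLM calls; here they are hypothetical stand-ins on a small number puzzle (get from 1 to a target using "+3" and "*2"), so the example runs without a model.

```python
from typing import Callable, Optional

State = tuple[int, list[str]]  # (current value, operations applied so far)

OPS: dict[str, Callable[[int], int]] = {
    "+3": lambda x: x + 3,
    "*2": lambda x: x * 2,
}


def propose(state: State) -> list[State]:
    """Stand-in for the model generating candidate next thoughts."""
    value, path = state
    return [(fn(value), path + [name]) for name, fn in OPS.items()]


def evaluate(state: State, target: int) -> int:
    """Stand-in for the model scoring a partial solution (higher is better)."""
    return -abs(target - state[0])


def tree_of_thoughts_bfs(
    start: int, target: int, max_depth: int = 5, beam_width: int = 3
) -> Optional[list[str]]:
    frontier: list[State] = [(start, [])]
    for _ in range(max_depth):
        # Expand every kept state, then check whether any child solves the task.
        candidates = [child for state in frontier for child in propose(state)]
        for value, path in candidates:
            if value == target:
                return path
        # Self-evaluation: keep only the most promising branches, drop the rest.
        candidates.sort(key=lambda s: evaluate(s, target), reverse=True)
        frontier = candidates[:beam_width]
    return None


solution = tree_of_thoughts_bfs(start=1, target=14)
print(solution)  # ['+3', '+3', '*2']
```

Pruning weak branches at each level is what separates this from simply sampling many independent chains.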

Graph of thoughts

Just 9 days after the ToT paper, GoT was published on 26 May 2023 as another approach to improving chain-of-thought reasoning!

GoT feels intuitive.

You can think of it as a two-stage framework:

• generate rationales as graph nodes ፨

• infer answers based on the best paths
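The two stages above can be sketched as a scored graph plus a best-path search. This is a toy reading of the two bullets, not the paper's architecture: the node names, the integer scores and the "sum the scores along a path" rule are all assumptions made for illustration. What it does show is the thing a tree cannot do, which is a thought ("c") that builds on more than one parent.

```python
from graphlib import TopologicalSorter


def best_path(
    scores: dict[str, int],
    parents: dict[str, set[str]],
    answer_nodes: list[str],
) -> tuple[int, list[str]]:
    """Return (total score, path) of the highest-scoring path to any answer node."""
    best: dict[str, tuple[int, list[str]]] = {}
    # Visit thoughts so that every parent is handled before its children.
    for node in TopologicalSorter(parents).static_order():
        preds = parents.get(node, set())
        if preds:
            prev_total, prev_path = max(
                (best[p] for p in preds), key=lambda entry: entry[0]
            )
        else:
            prev_total, prev_path = 0, []
        best[node] = (prev_total + scores[node], prev_path + [node])
    return max((best[a] for a in answer_nodes), key=lambda entry: entry[0])


# Stage 1 (assumed already done): rationales as nodes with hypothetical 0-10 scores.
scores = {"a": 9, "b": 4, "c": 8, "ans1": 5, "ans2": 7}
# "c" merges two earlier thoughts, which makes this a graph rather than a tree.
parents = {"c": {"a", "b"}, "ans1": {"b"}, "ans2": {"c"}}

# Stage 2: infer the answer from the best path.
total, path = best_path(scores, parents, ["ans1", "ans2"])
print(total, path)  # 24 ['a', 'c', 'ans2']
```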

You can imagine it as an improvement to the ToT model, as Tony Seale did on LinkedIn:

Backtracking

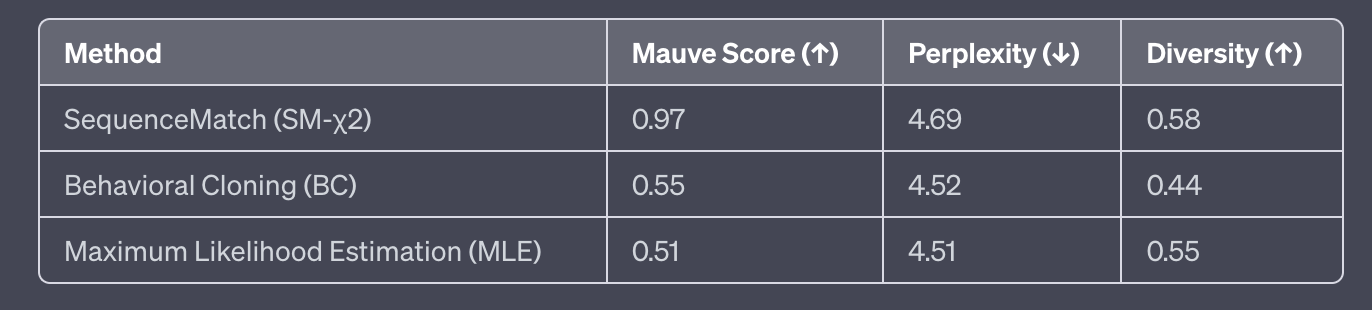

On 8 Jun 2023, researchers behind the SequenceMatch paper first proposed “this one weird trick”:

Train the models to use backspace.

Backspace is all you need? 🔙

The results of this limited experiment are both surprising and thought-provoking.

If a language model evaluates its previous output and has the ability to backtrack, how different is it from creating multiple paths of reasoning?
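To picture what a backspace action does to the output, here is a minimal sketch of the decoding side only. The token name `<bksp>` and the function are hypothetical; the paper's actual contribution is training a model to emit such an action, which this snippet does not attempt.

```python
BACKSPACE = "<bksp>"  # hypothetical token name, chosen for this sketch


def apply_backspaces(tokens: list[str]) -> list[str]:
    """Resolve backspace tokens: each one deletes the most recent kept token."""
    output: list[str] = []
    for token in tokens:
        if token == BACKSPACE:
            if output:  # a backspace with nothing before it is ignored
                output.pop()
        else:
            output.append(token)
    return output


# The model writes "5", notices the mistake, backs up and writes "4" instead.
generated = ["2", "+", "2", "=", "5", BACKSPACE, "4"]
print(apply_backspaces(generated))  # ['2', '+', '2', '=', '4']
```

Seen this way, a single sequence with backspaces is a depth-first walk over the same tree that ToT explores explicitly.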

Multiagent debate

Backtracking to 23 May 2023, researchers wrote about a more dynamic reasoning system, where thoughts are built up through discourse.

It's all about getting the agents to exchange and develop ideas in a structured fashion.

Multiagent debate sounds like democracy 🦅

This method really shines in situations where the answer isn't black and white and judgement calls need to be made.

Apparently, debate boosts information filtering AND math accuracy.
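The debate loop itself is simple to sketch. Below, each agent is a plain function that takes the question and the other agents' latest answers; in practice each would be an LLM call with the peers' responses pasted into the prompt. The three toy agents, the number of rounds and the final majority vote are assumptions for illustration.

```python
from collections import Counter
from typing import Callable

# An agent maps (question, peers' latest answers) to its own answer.
Agent = Callable[[str, list[str]], str]


def debate(agents: list[Agent], question: str, rounds: int = 2) -> str:
    # Round 0: every agent answers independently.
    answers = [agent(question, []) for agent in agents]
    for _ in range(rounds):
        # Each agent sees everyone else's previous answer and may revise its own.
        answers = [
            agent(question, answers[:i] + answers[i + 1:])
            for i, agent in enumerate(agents)
        ]
    # Settle on the most common final answer.
    return Counter(answers).most_common(1)[0][0]


def confident_agent(question: str, peers: list[str]) -> str:
    return "12"  # toy agent that never changes its mind


def persuadable_agent(question: str, peers: list[str]) -> str:
    if not peers:
        return "10"  # starts with a wrong answer
    return Counter(peers).most_common(1)[0][0]  # then adopts the peers' consensus


result = debate(
    [confident_agent, persuadable_agent, confident_agent],
    "What is 3 * 4?",
)
print(result)  # 12
```

Real agents would weigh the peers' reasoning rather than just counting answers, which is where the gains come from.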

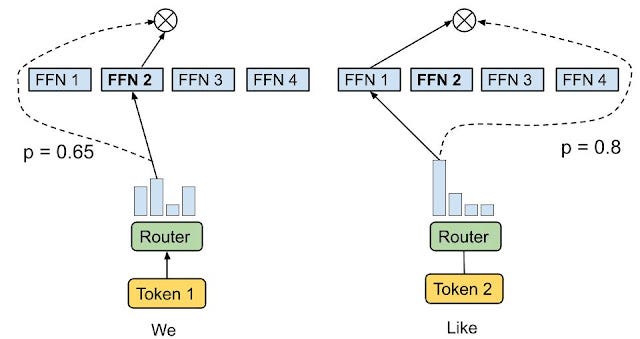

Mixture of experts (bonus)

Let's briefly detour into ensemble learning.

Since the 90s, various ways have evolved to combine specialised (expert) models.

An intuitive example is MoE with routing, shown in the image from a Google Research article.
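A minimal sketch of top-k routing, assuming the gate scores are already given. In a trained MoE layer the scores come from a learned gating network and the experts are neural sub-networks; here the experts are toy functions and all names are hypothetical.

```python
import math
from typing import Callable

Expert = Callable[[float], float]


def route(x: float, experts: list[Expert], gate_logits: list[float], k: int = 2) -> float:
    """Send x to the k highest-scoring experts and mix their outputs."""
    # Pick the k experts with the largest gate scores.
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    # Softmax over the selected scores only (shifted by the max for stability).
    peak = max(gate_logits[i] for i in top)
    exps = {i: math.exp(gate_logits[i] - peak) for i in top}
    norm = sum(exps.values())
    # Weighted sum of the chosen experts; the others are never evaluated.
    return sum((exps[i] / norm) * experts[i](x) for i in top)


experts: list[Expert] = [lambda x: x + 1, lambda x: x * 2, lambda x: -x]
gate_logits = [2.0, 2.0, -5.0]  # hypothetical scores for one input

mixed = route(3.0, experts, gate_logits, k=2)
print(mixed)  # 5.0
```

Only the selected experts run, which is why routing lets total parameter count grow without the same growth in compute per input.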

MoEs are a product of the times.

For example, a 1991 paper frames adaptive mixtures of local experts as an associative version of competitive learning, which was popular in those days.

George Hotz recently claimed that GPT-4 is an MoE. I'd guess the routing kind, but who knows?

Final thoughts

The starting point to building reasoning systems, including agents, is capable language models.

Implementing higher-level reasoning at the code level has never been easier, with tools like LangChain and OpenAI’s new built-in function calling. There are countless ways to connect inputs and outputs to each other and to other interfaces.

So we have the mechanisms to build any kind of reasoning imaginable using thoughts as the unit. You might have noticed how many of these reasoning methods are based on human-like reasoning. A key question is whether human-like reasoning is the best target for building new AI systems.

The prompt engineering community seems to have taken a liking to neuroscience, with Andrej Karpathy recently recommending that attendees at an AI agent hackathon read the book Brain and Behaviour.

Conjecture is an AI research company that sides with the extinction-risk argument and would likely condemn the idea of such a hackathon. But, interestingly, Conjecture’s approach to alignment involves emulating the logic of human reasoning, which they call Cognitive Emulation.

I’m somewhat sceptical that it’s easier to build human-like reasoning than it is to build a digitally-native reasoning system. Not for any particularly complex reason: we simply don’t understand the human mind that well.

When it comes to neuroscience, it feels like we hear the same thing every year or so:

“We used to think Y about the brain, but it turns out to be more complicated and interconnected than we thought” 🫠

FlyWire recently released a fully-mapped connectome of the fruit fly brain, tracing its neural pathways. It's a HUGE milestone that could one day allow researchers to model the fly's reasoning. It’s also a precursor to fully mapping the human brain’s connections. But the fruit fly’s brain has on the order of 100,000 neurons, compared to ~86 billion in humans. So we still have a long way to go.

But what’s the alternative to simulating human reasoning today?

Well, I think we should continue building systems iteratively, starting simple and gradually adding complexity. If we’re using software to reason above the language models, then it will come with built-in monitoring. Current AI agents are very limited in their capabilities, but they work well in specialised tasks, such as developing code, making tools and using tools.

Talking about human-like thinking is helpful as a concept, but AI agents will probably evolve to look very different. This image shared by @swyx obviously isn’t meant to be taken literally, but it’s helpful for imagining just how we could compartmentalise the components of an autonomous agent’s reasoning capabilities.